Exploring AI coding tools

In recent years, AI coding tools for software engineers have rapidly improved. Tools like GitHub Copilot, which has been around for over three years, are becoming an integral part of many developers’ workflows. However, the question remains: Do these tools really provide value? While I’ve seen the rise of these technologies, it wasn’t until last year that I had the opportunity to use them in my day-to-day work. After experimenting with Google’s Gemini coding assistant at work, I decided to explore and compare a few different AI tools in my own time.

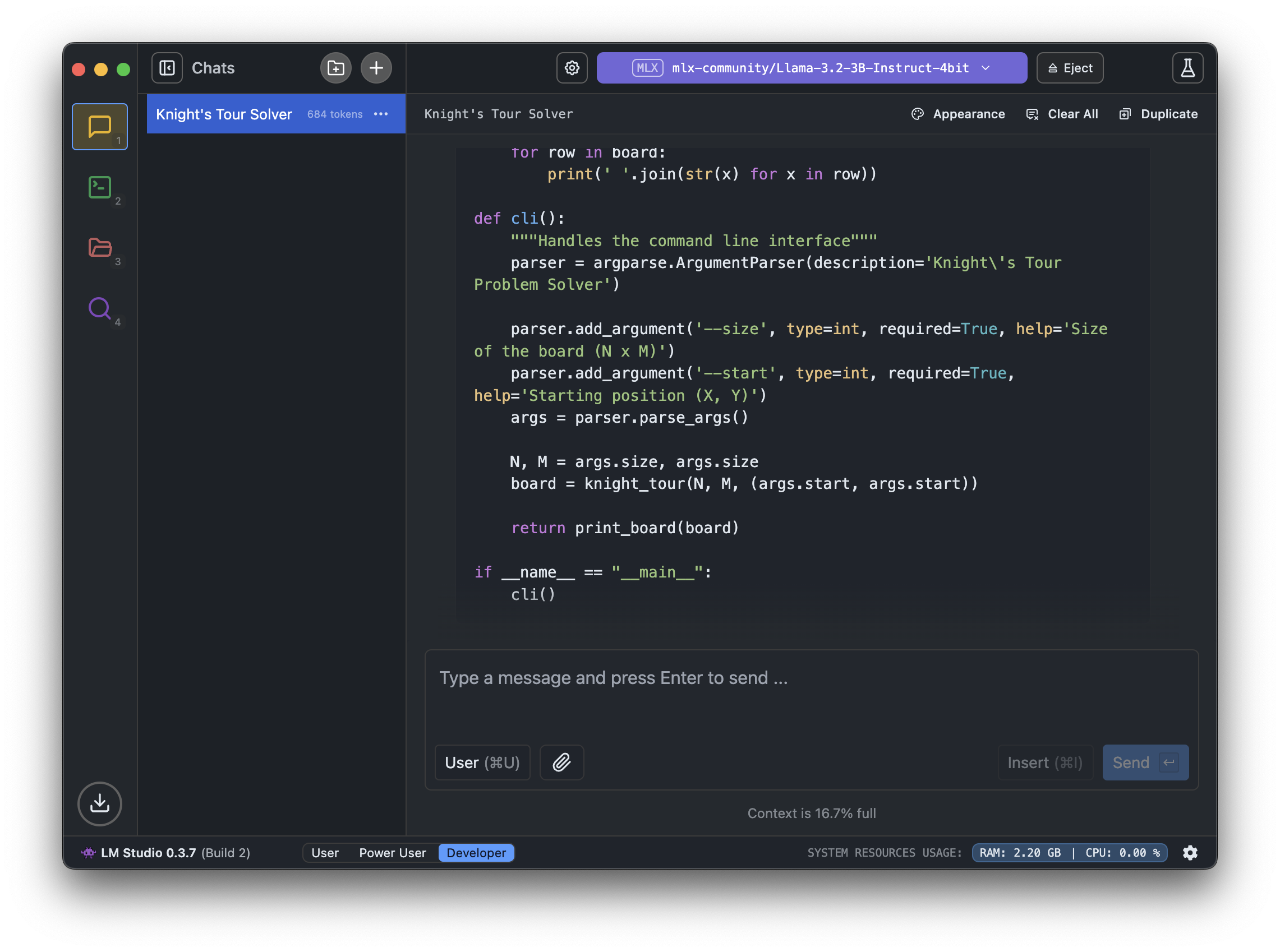

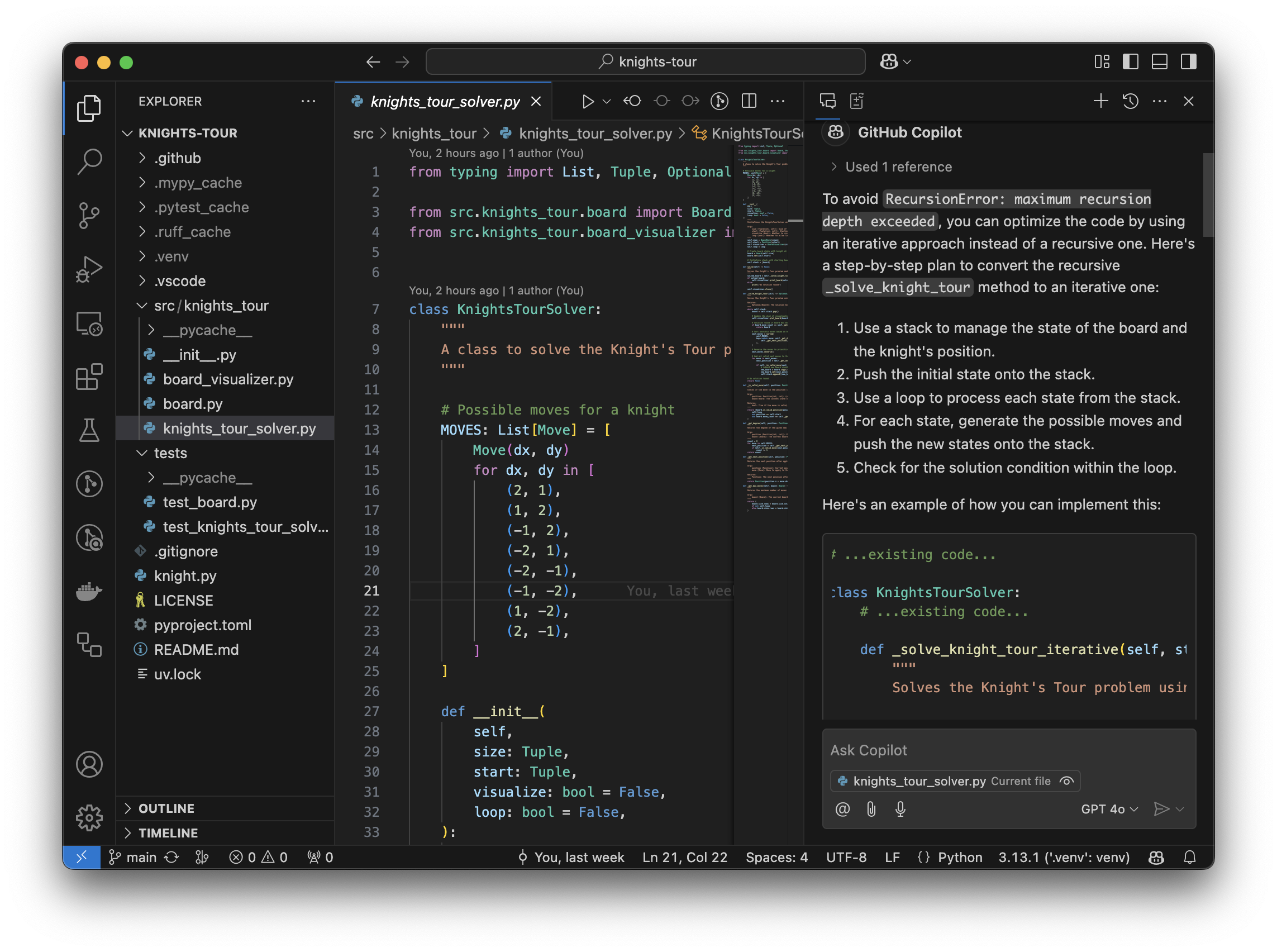

For my experiments, I chose GitHub Copilot (powered by OpenAI’s GPT-4o model) and a model I could run locally through LM Studio: Llama-3.2-3B-Instruct-4bit, a 3-billion-parameter model quantized to 4 bits. Although it is far less capable than GPT-4o, I thought it would make a useful point of comparison, especially since it ran entirely on my MacBook.

To make this comparison meaningful, I created a small Python CLI app to solve the classic Knight’s Tour problem, a well-known backtracking problem in mathematics and computer science. The goal is to move a knight around a chessboard so that it visits every square exactly once. With its clear rules and well-known optimizations, it makes a good test of algorithmic problem-solving, for humans and AI tools alike.
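As an illustration, here is a minimal backtracking sketch in Python. It is not the code either tool produced, and the names `solve_tour` and `KNIGHT_MOVES` are hypothetical. It places the knight on a start square, tries each legal unvisited move in turn, and undoes a move whenever it leads to a dead end.

```python
from typing import Optional

# The eight L-shaped moves a knight can make, as (row, column) offsets.
KNIGHT_MOVES = [(2, 1), (1, 2), (-1, 2), (-2, 1),
                (-2, -1), (-1, -2), (1, -2), (2, -1)]


def solve_tour(size: int, start: tuple[int, int] = (0, 0)) -> Optional[list[list[int]]]:
    """Return a board whose cells hold the visit order (0-based), or None if no tour exists."""
    board = [[-1] * size for _ in range(size)]  # -1 marks an unvisited square
    row, col = start
    board[row][col] = 0

    def backtrack(row: int, col: int, step: int) -> bool:
        if step == size * size:
            return True  # every square has been visited exactly once
        for d_row, d_col in KNIGHT_MOVES:
            nxt_row, nxt_col = row + d_row, col + d_col
            if 0 <= nxt_row < size and 0 <= nxt_col < size and board[nxt_row][nxt_col] == -1:
                board[nxt_row][nxt_col] = step
                if backtrack(nxt_row, nxt_col, step + 1):
                    return True
                board[nxt_row][nxt_col] = -1  # undo the move and try the next one
        return False

    return board if backtrack(row, col, 1) else None
```

Calling `solve_tour(5)` returns a 5×5 grid of visit numbers from 0 to 24. Plain backtracking like this is fine for small boards but can take a very long time on a full 8×8 board, which is why optimizations matter.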

Basic Problem Solving

The first phase of my experiment tested whether these AI tools could help me write a basic Python script to solve the Knight’s Tour problem. I began by giving both tools a simple prompt describing the task.

GitHub Copilot: Copilot quickly generated a full solution, and after a few rounds of follow-up prompting the code worked without major issues. I was impressed by how fast it got from the problem statement to working code.

Llama (via LM Studio): The local Llama model gave me a basic structure for the code but struggled to produce a complete, functional solution. Some of its suggested steps were useful, but it never produced a working result on its own, despite multiple rounds of prompting.

This initial comparison made it clear that while both tools could help me get started, GitHub Copilot was far better at producing a complete, working solution with only a little back-and-forth. There was still room for improvement in code quality, however, as I’ll explain below.

Code Optimization and Performance

Once the basic solution was in place, I tested how well the tools could improve the performance and structure of the code. Specifically, I wanted to see whether they would suggest optimizations such as Warnsdorff’s Rule, a heuristic that speeds up the search by always moving the knight to the square with the fewest onward moves.
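Here is a sketch of one common way to apply Warnsdorff’s Rule in Python. It is not the code Copilot generated, and the function name `warnsdorff_tour` is hypothetical. Candidate moves are sorted so that the square with the fewest unvisited onward moves is tried first; the search keeps backtracking as a fallback, so a rare dead end does not make it fail outright.

```python
from typing import Optional

# The eight L-shaped knight moves as (row, column) offsets.
KNIGHT_MOVES = [(2, 1), (1, 2), (-1, 2), (-2, 1),
                (-2, -1), (-1, -2), (1, -2), (2, -1)]


def warnsdorff_tour(size: int, start: tuple[int, int] = (0, 0)) -> Optional[list[tuple[int, int]]]:
    """Return the squares in visit order, or None if no tour exists from `start`."""
    visited = [[False] * size for _ in range(size)]

    def unvisited_neighbours(row: int, col: int) -> list[tuple[int, int]]:
        # All on-board squares a knight can reach from (row, col) that are still free.
        squares = []
        for d_row, d_col in KNIGHT_MOVES:
            r, c = row + d_row, col + d_col
            if 0 <= r < size and 0 <= c < size and not visited[r][c]:
                squares.append((r, c))
        return squares

    path = [start]
    visited[start[0]][start[1]] = True

    def extend(row: int, col: int) -> bool:
        if len(path) == size * size:
            return True  # every square has been visited
        # Warnsdorff's Rule: try squares with the fewest onward moves first.
        candidates = sorted(unvisited_neighbours(row, col),
                            key=lambda sq: len(unvisited_neighbours(*sq)))
        for r, c in candidates:
            visited[r][c] = True
            path.append((r, c))
            if extend(r, c):
                return True
            visited[r][c] = False  # dead end: undo and try the next candidate
            path.pop()
        return False

    return path if extend(*start) else None
```

With this ordering, `warnsdorff_tour(8)` typically finds a full 64-square tour with little or no backtracking, whereas unordered backtracking on the same board can run for a very long time. A pure Warnsdorff implementation would simply take the best-ranked square at each step and give up at a dead end; keeping the backtracking fallback costs a few extra lines but makes the search complete.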

GitHub Copilot: Copilot was quick to suggest Warnsdorff’s Rule and provided working code to implement it, which significantly improved the performance of the initial solution. This was a notable strength of Copilot: it incorporated an optimization technique with minimal prompting.

Llama (via LM Studio): The Llama model also suggested Warnsdorff’s Rule but couldn’t produce working code for it. Its suggestions were sound in theory but didn’t translate into a functioning solution. This gap highlights the limitations of a small model like the 3B-parameter Llama 3.2, especially on more complex tasks.

Takeaways: Code Quality and AI Limitations

Although tools like GitHub Copilot can generate full working solutions, there are still several challenges when it comes to code quality. Here are some important takeaways from my testing:

Code Readability: Copilot’s solution was functional, but it wasn’t particularly readable or maintainable. Some variables were poorly named, and the code could have been structured more clearly. I had to refactor it by hand to make it understandable, a step that even an experienced developer would still need to take after accepting Copilot’s suggestions.

Optimization Limitations: Copilot was able to suggest and implement an optimization like Warnsdorff’s Rule, but the Llama model, although it suggested the same rule, couldn’t implement it. This shows that while AI tools are helpful for basic tasks, they struggle more as complexity increases and often fall short of delivering more sophisticated solutions.

Handling Code Across Multiple Files: One issue I ran into with GitHub Copilot in VS Code was context. Copilot works well for generating code within a single file, but it struggles to give useful suggestions when the code spans multiple files or needs more surrounding context. In large projects this could be a major limitation, since keeping the right context across files is key to writing clean, cohesive code.

The Bigger Picture: AI Tools in Software Development

This experience aligns with what I’ve seen from other AI coding tools I’ve used professionally, such as Google’s Gemini. These tools are helpful for generating boilerplate, providing starting points, and solving straightforward problems, but they often falter as problem complexity increases and still need significant improvement to handle complex tasks effectively.

One of the key areas where I see AI tools improving in the future is their ability to handle larger codebases and more sophisticated optimization tasks. At this stage, however, I believe these tools are most useful for routine, repetitive tasks rather than high-level programming. For example, AI tools could be used for generating basic code scaffolding, writing unit tests, or solving well-defined, standard problems like sorting or searching. But when it comes to more complex, domain-specific code or nuanced problem-solving, developers will likely need to rely on their own expertise, at least for now.

Conclusion: What’s Next for AI Coding Tools?

After using GitHub Copilot and the Llama model, I’m optimistic about the future of AI-assisted coding but believe we’re still in the early stages. While these tools can provide value for basic tasks, they’re not yet ready to replace the nuanced judgment and problem-solving skills of human developers. I’m excited to see how these tools will evolve over time. As AI models get more powerful and better at maintaining context across files, I imagine they’ll become more useful for larger, more complex projects.

For now, I’ll continue to write most of my own code, but I’ll definitely keep an eye on AI tools as they continue to improve. It’s clear that they have the potential to significantly augment software development, even if we’re not quite there yet.